AWS re:Invent 2025: Bedrock AgentCore—The Trust Layer for Enterprise AI

There was an abundance of announcements, hype, and “Agentic AI” buzzwords at AWS re:Invent 2025. The really big news was that Amazon Bedrock has evolved from a model hosting service into the governance layer that makes autonomous AI acceptable to enterprises with something to lose.

The shift is architectural. We're moving from human-in-the-loop copilots—where AI suggests and humans approve—to trusted autonomous agents operating inside clearly defined, enforceable boundaries. For security teams nervous about AI running wild, platform engineers tired of building custom guardrails, and architects explaining to the board why autonomous agents won't create their next headline—this is the announcement that matters.

The Autonomous Agent Governance Layer: AgentCore

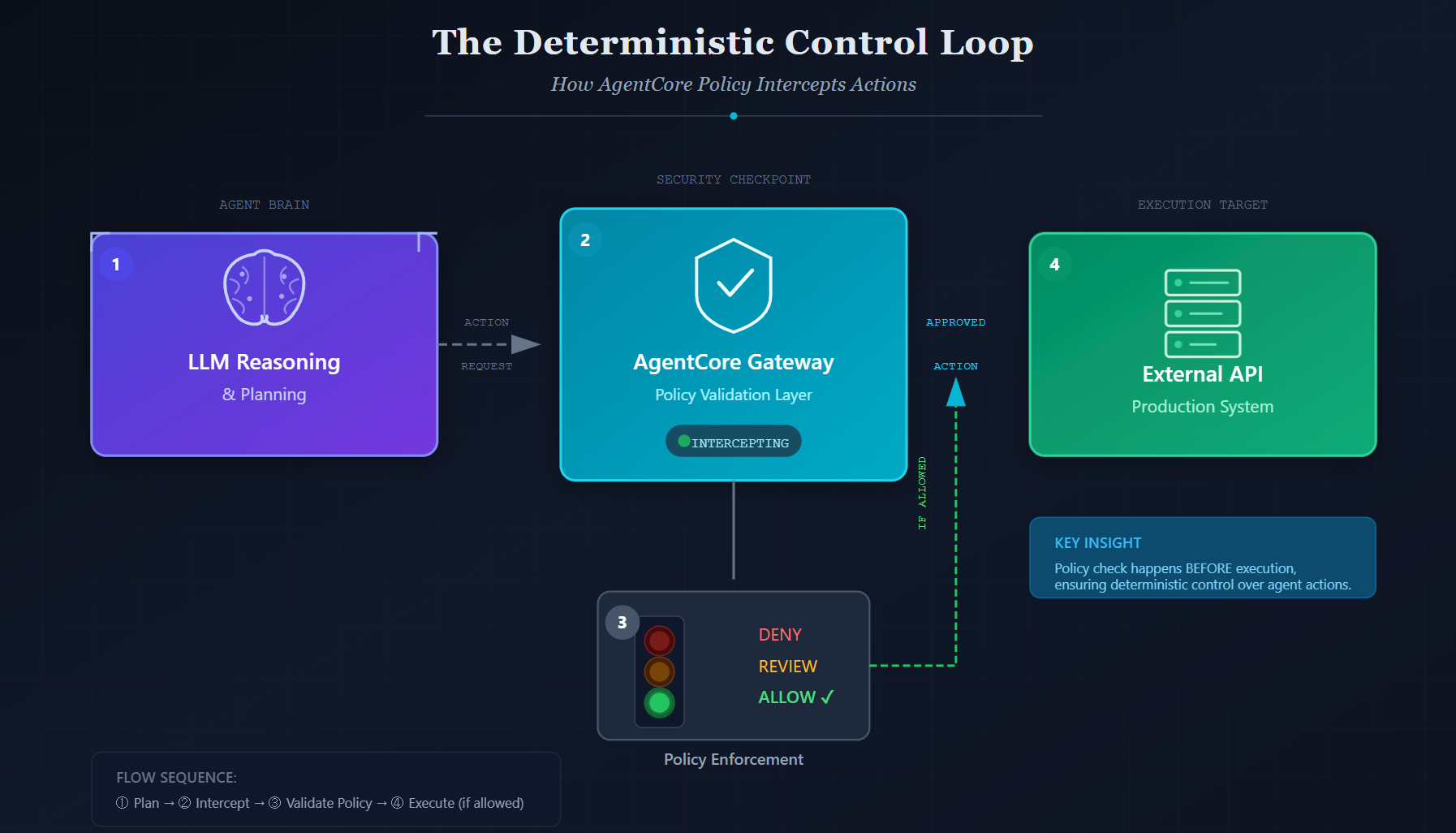

AgentCore sits in the middle, intercepting each request and allowing or denying the call to the API.

Every conversation I've had with enterprise security teams about AI agents eventually hits the same wall: "How do we know it won't go rogue?" It's a fair question. When you're deploying an agent that can reason, plan, and execute actions across your infrastructure, "trust the prompt" isn't a governance strategy.

Bedrock AgentCore is AWS's answer to this existential enterprise concern, and the architecture is genuinely clever.

AgentCore Policy: Deterministic Control Outside the LLM

Here's what makes AgentCore Policy different: policies are defined in natural language but executed outside the LLM reasoning loop via the AgentCore Gateway. The policy enforcement is deterministic, not probabilistic. It doesn't matter how cleverly an agent (or malicious prompt) tries to reason around a constraint—the gateway enforces it at runtime before the action executes.

You can define policies like "This agent cannot delete objects in the production S3 bucket" or "This agent must log all database modifications before execution." These aren't suggestions embedded in a system prompt. They're hard constraints enforced at the infrastructure layer. For compliance teams, this is the difference between "we hope the AI behaves" and "we can prove it can't misbehave." That's an auditor-friendly distinction.
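To make the "outside the reasoning loop" idea concrete, here is a minimal sketch of a deterministic gateway check. It is not the AgentCore API: `ToolCall`, `DenyRule`, and `PolicyGateway` are hypothetical names invented for illustration, and the rule format is an assumption. The point is only that the allow/deny decision is plain code that runs before the tool does, so no amount of prompt cleverness changes the outcome.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Tuple


@dataclass(frozen=True)
class ToolCall:
    """A tool invocation an agent wants to make (hypothetical shape)."""
    agent_id: str
    action: str    # e.g. "s3:DeleteObject"
    resource: str  # e.g. "arn:aws:s3:::prod-bucket/report.csv"


@dataclass(frozen=True)
class DenyRule:
    """Deny calls with this action on resources under this prefix (hypothetical format)."""
    action: str
    resource_prefix: str
    reason: str

    def matches(self, call: ToolCall) -> bool:
        return call.action == self.action and call.resource.startswith(self.resource_prefix)


class PolicyGateway:
    """Sits between the agent and its tools; the model never sees or runs this logic."""

    def __init__(self, rules: Iterable[DenyRule]) -> None:
        self._rules = list(rules)

    def authorize(self, call: ToolCall) -> Tuple[bool, str]:
        # Deterministic: the same call against the same rules always gives the same answer.
        for rule in self._rules:
            if rule.matches(call):
                return False, rule.reason
        return True, "allowed"

    def invoke(self, call: ToolCall, tool: Callable[[ToolCall], str]) -> str:
        allowed, reason = self.authorize(call)
        if not allowed:
            # The tool is never executed when a rule denies the call.
            raise PermissionError(f"denied: {reason}")
        return tool(call)


gateway = PolicyGateway([
    DenyRule("s3:DeleteObject", "arn:aws:s3:::prod-bucket/", "no deletes in production"),
])
decision = gateway.authorize(ToolCall("agent-1", "s3:DeleteObject", "arn:aws:s3:::prod-bucket/report.csv"))
print(decision)
```

Because the decision is a pure function of the call and the rule set, every allow or deny can be logged and replayed for an audit.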

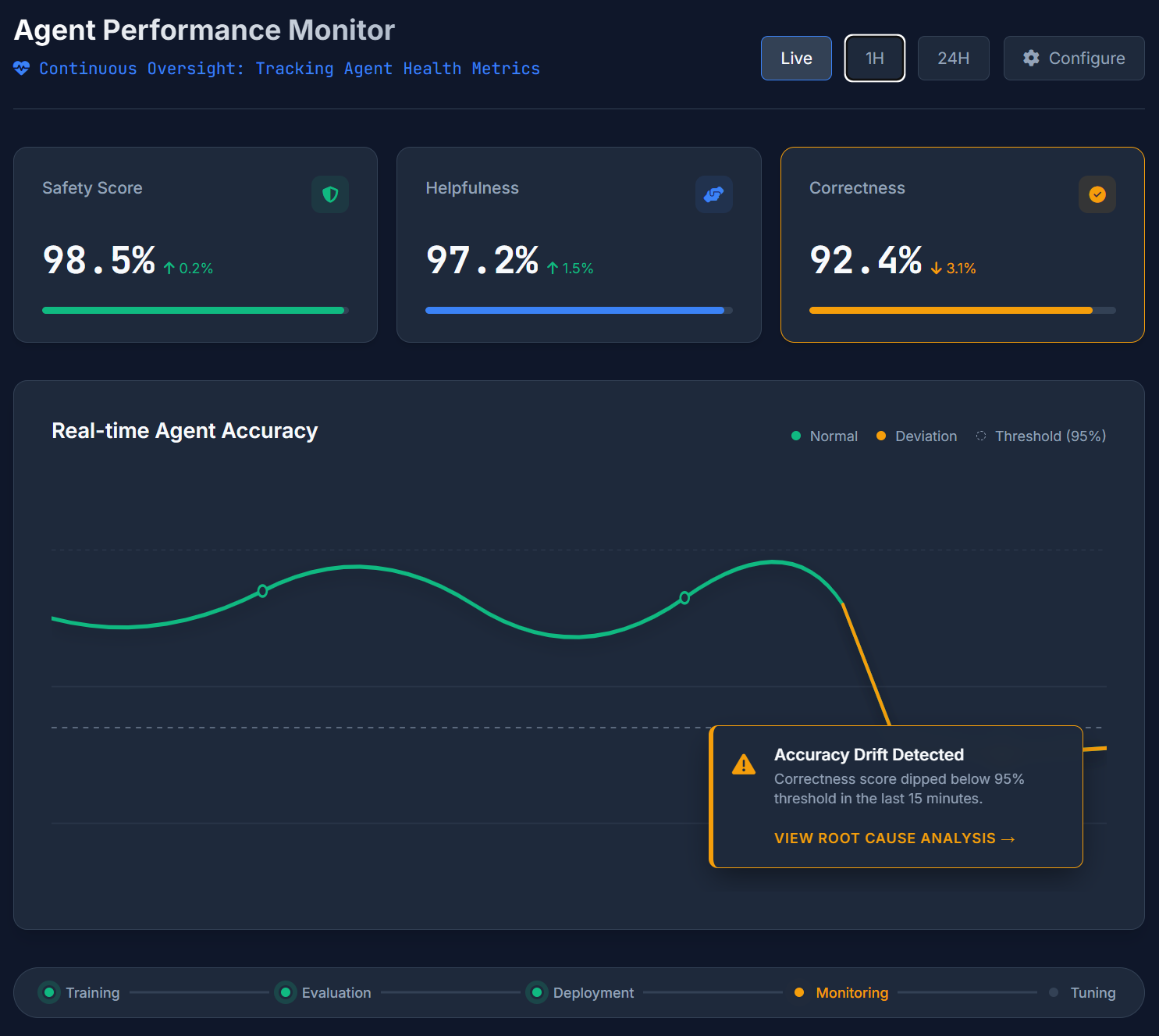

AgentCore Evaluations: Continuous Testing for Agent Behavior

Governance isn't just about blocking bad behavior—it's about knowing whether your agents are actually doing what they're supposed to do. AgentCore Evaluations provides 13 pre-built evaluators covering correctness, helpfulness, safety, and more, running continuously against real agent interactions.

This shifts agent oversight from manual spot-checks to an actual DevOps lifecycle. You get alerting when agent behavior regresses, tracking of evaluation metrics over time, and the ability to catch problems before they compound into incidents. It's the difference between hoping your agent still works correctly and knowing it does.
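As a rough illustration of what "alerting when agent behavior regresses" can mean in practice, the sketch below compares recent evaluator scores against a baseline window. The evaluator names, the 0-to-1 score scale, and the `detect_regressions` helper are all assumptions made for this example. They are not the AgentCore Evaluations interface.

```python
from statistics import mean
from typing import Dict, List


def detect_regressions(
    baseline: Dict[str, List[float]],
    recent: Dict[str, List[float]],
    tolerance: float = 0.05,
) -> Dict[str, float]:
    """Return evaluators whose recent mean score dropped more than `tolerance` below baseline."""
    regressions: Dict[str, float] = {}
    for name, baseline_scores in baseline.items():
        recent_scores = recent.get(name)
        if not baseline_scores or not recent_scores:
            continue  # not enough data to compare this evaluator
        drop = mean(baseline_scores) - mean(recent_scores)
        if drop > tolerance:
            regressions[name] = round(drop, 3)
    return regressions


# Hypothetical scores on a 0-to-1 scale.
baseline = {"correctness": [0.9, 0.9], "safety": [1.0, 1.0]}
recent = {"correctness": [0.7, 0.7], "safety": [1.0, 0.98]}
print(detect_regressions(baseline, recent))
```

In a real deployment the returned dictionary would feed whatever alerting channel the team already uses; the comparison logic is the part that turns spot-checks into a repeatable gate.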

Episodic Memory: From Smart Tools to Reliable Workers

Real enterprise workflows don't complete in a single interaction—they span days and require context from prior actions. With episodic memory, agents remember what they've done, what failed, and what outcomes occurred across extended timeframes. An agent handling a multi-week procurement workflow recalls that it verified budget approval last Tuesday. A DevOps agent investigating an incident remembers the remediation that didn't work yesterday.

This transforms agents from "smart tools you invoke" into "reliable workers who handle processes."
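A toy version of the idea, assuming nothing about how AgentCore stores or retrieves memories: each action and its outcome is recorded as an episode, and the agent queries past episodes before acting. `Episode` and `EpisodicMemory` are hypothetical names for this sketch, and an in-memory list stands in for durable storage.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional


@dataclass(frozen=True)
class Episode:
    workflow_id: str
    action: str
    outcome: str  # "succeeded" or "failed"
    at: datetime


class EpisodicMemory:
    """In-memory stand-in for a durable episode store (illustrative only)."""

    def __init__(self) -> None:
        self._episodes: List[Episode] = []

    def record(self, episode: Episode) -> None:
        self._episodes.append(episode)

    def recall(
        self,
        workflow_id: str,
        outcome: Optional[str] = None,
        since: Optional[datetime] = None,
    ) -> List[Episode]:
        """Return episodes for a workflow, optionally filtered by outcome and time."""
        return [
            e for e in self._episodes
            if e.workflow_id == workflow_id
            and (outcome is None or e.outcome == outcome)
            and (since is None or e.at >= since)
        ]

    def already_failed(self, workflow_id: str, action: str) -> bool:
        """True if this action was tried before in the workflow and did not work."""
        return any(e.action == action for e in self.recall(workflow_id, outcome="failed"))


memory = EpisodicMemory()
memory.record(Episode("incident-42", "restart-service", "failed", datetime(2025, 12, 1, 2, 0)))
memory.record(Episode("incident-42", "roll-back-deploy", "succeeded", datetime(2025, 12, 1, 2, 30)))

# Before retrying a remediation, the agent checks what it already tried.
print(memory.already_failed("incident-42", "restart-service"))
```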

Autonomous Frontier Agents: Domain-Specific AI Workers

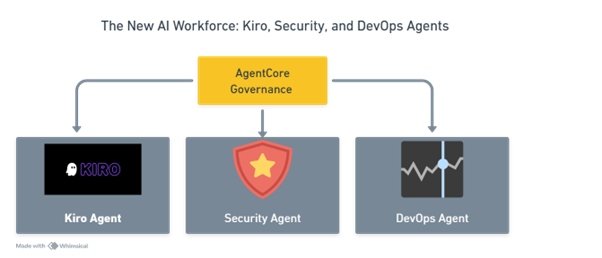

AWS didn't just build the governance platform—they're shipping agents that demonstrate what's possible. Three domain-specific autonomous agents mark the shift from "AI that helps you" to "AI that works alongside you."

Kiro Autonomous Agent functions as a virtual developer. It can identify a software defect, reason about the fix, implement the change, run tests, and validate the outcome—with minimal or optional human intervention. This isn't autocomplete on steroids. It's an agent that owns a task end-to-end.

AWS Security Agent investigates and responds to security risks autonomously. It can analyze alerts, correlate signals, assess risk, and take initial response actions. Security teams have been drowning in alerts for years—this is the architecture for having AI handle the initial triage and investigation.

AWS DevOps Agent diagnoses root causes of operational incidents. It can correlate logs, trace dependencies, identify likely causes, and recommend or execute remediations. At 2 AM, when your on-call engineer is staring at cascading failures, having an agent that has already done the initial diagnosis is meaningful.

Autonomous agents operating within AgentCore governance boundaries

The pattern across all three: these agents reason, plan, execute, and validate. They operate within the AgentCore governance framework, so the boundaries are enforced. This is autonomous operation inside clear guardrails—exactly what enterprises need.
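The execute-and-validate half of that pattern can be sketched as a small control loop. This is a generic illustration, not how any of the three AWS agents is implemented: `run_task` is a hypothetical helper, the plan is assumed to arrive as a list of step names produced by the model's reasoning upstream, and `execute` and `validate` are caller-supplied functions.

```python
from typing import Callable, List, Tuple

StepLog = Tuple[str, int, bool]  # (step name, attempt number, validated?)


def run_task(
    plan_steps: List[str],
    execute: Callable[[str], object],
    validate: Callable[[str, object], bool],
    max_attempts: int = 2,
) -> Tuple[bool, List[StepLog]]:
    """Run each planned step, validate its result, and retry up to `max_attempts` times."""
    log: List[StepLog] = []
    for step in plan_steps:
        for attempt in range(1, max_attempts + 1):
            result = execute(step)
            ok = validate(step, result)
            log.append((step, attempt, ok))
            if ok:
                break
        else:
            # Every attempt failed validation: stop and report instead of pressing on.
            return False, log
    return True, log


# Hypothetical usage: a fix is only "done" once the test run validates it.
outcomes = iter(["tests failed", "tests passed"])
done, log = run_task(
    ["apply-fix"],
    execute=lambda step: next(outcomes),
    validate=lambda step, result: result == "tests passed",
)
print(done, log)
```

The detail worth noticing is the explicit validation gate: the loop treats an unvalidated step as unfinished and stops rather than assuming success.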

Model Power and Customization: Better Agents Through Better Models

Autonomous agents are only as capable as the models powering them. AWS made significant moves on the model layer that directly enable better agent performance.

Reinforcement Fine-Tuning: The Quality Unlock

Reinforcement Fine-Tuning (RFT) is the quiet game-changer. Traditional fine-tuning requires massive labeled datasets. RFT uses feedback-driven improvement instead, learning from scored outcomes rather than requiring exhaustive examples. AWS reports average accuracy gains of 66% over base models. Historically, getting these gains required deep ML expertise. Bedrock automates the process, making domain-specific model improvement accessible to teams without dedicated ML engineers.
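"Learning from outcomes" generally means something scores each model response, and training pushes the model toward higher-scoring ones. The sketch below shows what such a scoring function might look like for a structured-extraction task. It is an assumption-laden illustration: the `reward` signature, the JSON task, and the field names are made up here and do not reflect the interface Bedrock expects.

```python
import json
from typing import Dict


def reward(model_output: str, expected: Dict[str, object]) -> float:
    """Score a response in [0, 1]: 0 for unusable output, else the share of fields it got right."""
    try:
        parsed = json.loads(model_output)
    except json.JSONDecodeError:
        return 0.0  # output is not valid JSON, so nothing can be credited
    if not isinstance(parsed, dict) or not expected:
        return 0.0
    hits = sum(1 for key, value in expected.items() if parsed.get(key) == value)
    return hits / len(expected)


# Hypothetical invoice-extraction example: one of two fields is correct.
expected = {"vendor": "Acme", "total": 100}
print(reward('{"vendor": "Acme", "total": 120}', expected))
```

Writing a scorer like this is usually far cheaper than hand-labeling thousands of ideal responses, which is the practical appeal of the approach.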

Amazon Nova 2 and Nova Forge

The Nova 2 family expands what's possible. Nova 2 Omni handles text, image, video, and speech in a single multimodal model—essential for agents that need to understand documents with images or analyze video content. Nova 2 Lite provides cost-efficient inference for workloads where you need scale over peak capability.

Nova Forge is the most interesting piece for enterprises with proprietary advantages. It lets customers build frontier-level models using Nova checkpoints combined with their own data. You're not just fine-tuning—you're creating models that encode your competitive differentiation while benefiting from AWS's foundational model research.

Infrastructure: The Foundation for Trust and Scale

Enterprise AI doesn't run on hopes and dreams—it runs on infrastructure. AWS reinforced the foundation layer with announcements that matter for cost, performance, and compliance.

Trainium 3 UltraServers deliver the next generation of purpose-built AI training hardware. The performance and cost efficiency gains directly impact what's economically feasible for agent workloads at scale. When your agent needs to process thousands of complex interactions daily, infrastructure economics determine what's viable.

AWS AI Factories address the sovereignty and compliance requirements that keep regulated industries up at night. These are dedicated AWS AI infrastructure deployments in customer data centers, running Bedrock with Trainium and NVIDIA hardware. Healthcare, financial services, government—if you need AI capability but can't send data to the cloud, AI Factories provide a path forward.

This isn't just about raw compute. It's about making Bedrock's governance and agent capabilities available in environments where they previously couldn't exist due to regulatory constraints.

What This Means for Enterprise AI Strategy

The re:Invent 2025 announcements crystallize a strategic position: Amazon Bedrock is no longer just where you host models. It's the control plane, governance layer, and runtime that makes autonomous AI deployable in organizations with real risk profiles.

The pieces fit together deliberately. AgentCore provides deterministic policy enforcement. Continuous evaluations give you DevOps-style observability. Episodic memory enables agents to handle real workflows. Domain-specific agents demonstrate the pattern. RFT and Nova make your agents better at your specific work. Infrastructure improvements make it economically viable and compliance-friendly.

If you're building AI capability for an enterprise that cares about governance, this is the architectural foundation to understand. The shift from copilots to trusted autonomy is happening. AWS just made it governable.