Deep Learning Fundamentals: Neural Networks and Beyond

Welcome back! Today we're diving into Deep Learning - the technology that's revolutionizing AI and powering everything from voice assistants to self-driving cars. If Machine Learning is teaching computers to learn, Deep Learning is teaching them to learn like our brains do.

What Makes Deep Learning "Deep"?

Deep Learning is a subset of Machine Learning that uses artificial neural networks with multiple layers (hence "deep"). It's inspired by the structure and function of the human brain, using interconnected nodes that process information in a way loosely modeled on biological neurons.

The "deep" refers to the multiple hidden layers between input and output - like having many levels of understanding between seeing an image and recognizing it as a cat.

Neural Networks: The Building Blocks

Raw data enters the network, early layers detect simple patterns, later layers combine those patterns into features and then complex concepts, and the output layer makes the final prediction.

Understanding the Architecture

Picture a neural network like a multi-story office building where information flows from the ground floor to the top:

Input Layer (Ground Floor)

Receives raw data (pixels of an image, words in a sentence)

Each input is like a different entrance door

Hidden Layers (Middle Floors)

Where the magic happens

Each layer extracts different features

Early layers: Simple patterns (edges, colors)

Deeper layers: Complex concepts (faces, objects)

Output Layer (Top Floor)

Final decision or prediction

"This is a cat with 97% confidence"

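To make the building analogy concrete, here is a minimal sketch of a single forward trip from the input layer to the output layer. The three "pixel" values, the weights, and the two labels are all hypothetical, hand-picked numbers for illustration; a real network would learn its weights during training and would be far larger.

```python
import math

def relu(values):
    # Hidden-layer activation: negative signals are set to zero.
    return [max(0.0, v) for v in values]

def softmax(values):
    # Turns raw output scores into probabilities that sum to 1.
    highest = max(values)
    exps = [math.exp(v - highest) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def dense(inputs, weights, biases):
    # One layer: each row of weights belongs to one neuron.
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def forward(pixels, hidden_w, hidden_b, out_w, out_b):
    hidden = relu(dense(pixels, hidden_w, hidden_b))   # middle floor
    return softmax(dense(hidden, out_w, out_b))        # top floor

# Hypothetical weights, chosen by hand rather than learned.
HIDDEN_W = [[1.0, -1.0, 0.5], [-0.5, 1.0, 1.0]]
HIDDEN_B = [0.0, 0.1]
OUT_W = [[2.0, -1.0], [-1.0, 2.0]]
OUT_B = [0.0, 0.0]
LABELS = ["cat", "dog"]

probs = forward([0.9, 0.1, 0.4], HIDDEN_W, HIDDEN_B, OUT_W, OUT_B)
best = max(range(len(probs)), key=probs.__getitem__)
print(f"This is a {LABELS[best]} with {probs[best]:.0%} confidence")
```

The softmax step at the end is what produces a confidence value like the one quoted for the output layer.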
How Neural Networks Learn

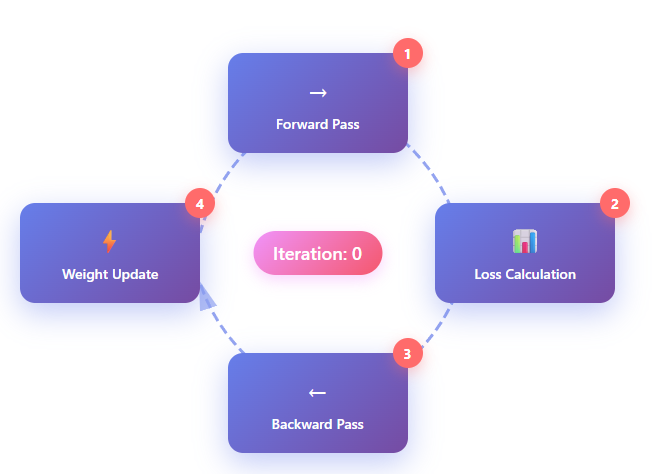

Here's what happens when we train a neural network:

The training loop is the core process that enables neural networks to learn from data. Each iteration through the loop slightly improves the network's ability to make accurate predictions.

Forward Pass: Input data flows through the network layer by layer. Each neuron performs calculations using current weights and biases, passing results forward until we get a prediction at the output layer.

Compare Output/Loss Calculation: Compare the network's prediction with the actual correct answer. The loss function quantifies how wrong the prediction was - a higher loss means a bigger error that needs correction.

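Backward Pass (Backpropagation): Calculate how much each weight contributed to the error. Using calculus (the chain rule), backpropagation computes the gradient of the loss with respect to every weight. Gradient descent then uses those gradients to decide in which direction, and by how much, each weight should be adjusted to reduce the loss.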

Repeat/Weight Update: Adjust all weights and biases based on the gradients calculated during backpropagation. Small adjustments accumulate over many iterations, gradually improving the network's accuracy. Repeat thousands or millions of times until the predictions are accurate enough.

Think of it like tuning a massive orchestra - each adjustment makes the overall performance slightly better until you achieve harmony.
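The four steps above can be sketched in a few lines of plain Python. This is a toy, not a production recipe: it trains a single artificial neuron (so there are no hidden layers) on a made-up four-row dataset for the logical AND function, and the learning rate and epoch count are arbitrary choices for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, inputs):
    # Forward pass for a single neuron.
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)

def train(data, learning_rate=1.0, epochs=5000):
    weights, bias = [0.0, 0.0], 0.0
    history = []  # average loss per epoch
    for _ in range(epochs):
        grad_w, grad_b, total_loss = [0.0, 0.0], 0.0, 0.0
        for inputs, target in data:
            # 1. Forward pass
            prediction = predict(weights, bias, inputs)
            # 2. Loss calculation (binary cross-entropy)
            total_loss -= (target * math.log(prediction)
                           + (1 - target) * math.log(1 - prediction))
            # 3. Backward pass: for sigmoid + cross-entropy the gradient
            #    with respect to the neuron's input is (prediction - target).
            error = prediction - target
            grad_w = [g + error * x for g, x in zip(grad_w, inputs)]
            grad_b += error
        # 4. Weight update: step against the average gradient.
        n = len(data)
        weights = [w - learning_rate * g / n for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / n
        history.append(total_loss / n)
    return weights, bias, history

# Toy dataset: output is 1 only when both inputs are 1 (logical AND).
AND_DATA = [([0.0, 0.0], 0), ([0.0, 1.0], 0), ([1.0, 0.0], 0), ([1.0, 1.0], 1)]

weights, bias, history = train(AND_DATA)
print("loss at start:", round(history[0], 3), "loss at end:", round(history[-1], 3))
for inputs, target in AND_DATA:
    print(inputs, "->", round(predict(weights, bias, inputs), 3), "target", target)
```

Frameworks such as PyTorch and TensorFlow compute the backward pass automatically, but the loop they run has the same shape.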

Why Deep Learning Is Revolutionary

Pattern Recognition at Scale

Deep Learning excels at finding patterns humans might miss, transforming entire industries in the process. In computer vision, deep learning models now detect cancer in medical images with accuracy that, in some studies, matches or exceeds that of experienced radiologists, which could save lives through earlier detection. Natural language understanding has evolved to capture context and nuance in text that machines previously could not grasp, enabling everything from sophisticated chatbots to automated content moderation. Speech recognition now converts voice to text accurately enough that voice interfaces have become a natural part of our daily interactions. Perhaps most remarkably, generative AI powered by deep learning is now creating entirely new content, from photorealistic images to coherent long-form text, pushing the boundaries of what we thought machines could create.

Deep Learning Revolution

Medical Imaging AI

Deep learning models detect diseases in medical scans with accuracy that, in some studies, rivals or exceeds that of human specialists, supporting earlier diagnosis.

Context-Aware Chatbots

Natural language models understand context and nuance, maintaining coherent conversations and remembering important details throughout the interaction.

Speech Recognition

Converting voice to text with incredible accuracy, enabling natural voice interfaces and making technology accessible to everyone.

Generative AI Art

AI models create entirely new images, artwork, and designs from text descriptions, pushing the boundaries of creative expression.

Automatic Feature Engineering

The real revolution in deep learning lies in how it fundamentally changes the development process. Traditional machine learning requires humans to manually identify and extract important features from data, a time-consuming process that requires deep domain expertise. Deep learning flips this paradigm completely - the network learns what features matter automatically through its training process. Consider facial recognition as an example: in traditional approaches, engineers would need to manually measure and define features like eye distance, nose shape, and jaw structure, then write algorithms to detect these specific measurements. Deep learning models, by contrast, figure out the relevant features entirely on their own, often discovering subtle patterns and relationships that human engineers would never have thought to look for. This automatic feature discovery is what allows deep learning to tackle problems that were previously considered impossible for computers.

Key Deep Learning Applications

Computer Vision

Figure: computer vision applications. Top left: autonomous driving. Top right: medical imaging. Bottom left: facial recognition. Bottom right: quality control.

Deep learning has completely transformed how computers "see" and interpret visual information. Object detection systems can now identify and precisely locate multiple objects within complex images, enabling applications from autonomous vehicles navigating city streets to retail systems tracking inventory in real-time. Image classification has evolved beyond simple categorization to understanding subtle differences and contexts within images, powering everything from medical diagnosis to content moderation at scale. Facial recognition technology has become so sophisticated that it can identify specific individuals in crowds or verify identity with near-perfect accuracy, though this raises important privacy considerations. In medical imaging, deep learning models are detecting diseases from X-rays, MRIs, and CT scans with remarkable precision, often catching early-stage conditions that human eyes might miss. Industrial quality control has been revolutionized as well, with deep learning systems finding microscopic defects in manufacturing processes that would be impossible to catch manually. AWS makes these capabilities accessible through services like Amazon Rekognition for general image and video analysis, and Amazon Lookout for Vision for specialized defect detection in industrial settings.

Key Takeaways

How computers see:

Object Detection: Identifying and locating objects in images

Image Classification: Categorizing entire images

Facial Recognition: Identifying specific individuals

Medical Imaging: Detecting diseases from scans

Industrial Quality Control: Finding defects in manufacturing

AWS Services:

Amazon Rekognition - pre-trained for images and video

Amazon Lookout for Vision - specialized for defect detection
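As a rough sketch of what calling a pre-trained vision service looks like, the snippet below uses the boto3 `detect_labels` call for Amazon Rekognition. The bucket and object names are placeholders, the helper names are my own, and `SAMPLE_RESPONSE` is a hand-written dictionary shaped like the service's response, not real output. Running the AWS call itself assumes boto3 is installed and credentials are configured.

```python
def detect_labels_in_s3_image(bucket, key, min_confidence=80.0):
    """Ask Amazon Rekognition to label an image stored in S3."""
    import boto3  # imported here so the helper below runs without AWS set up
    client = boto3.client("rekognition")
    return client.detect_labels(
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        MaxLabels=10,
        MinConfidence=min_confidence,
    )

def summarize_labels(response):
    """Reduce a detect_labels response to (name, confidence) pairs."""
    return [(label["Name"], round(label["Confidence"], 1))
            for label in response.get("Labels", [])]

# Hand-written sample in the shape of a detect_labels response.
SAMPLE_RESPONSE = {
    "Labels": [
        {"Name": "Cat", "Confidence": 97.2},
        {"Name": "Pet", "Confidence": 95.04},
    ]
}
print(summarize_labels(SAMPLE_RESPONSE))

# With AWS credentials configured, a real call would look like:
# response = detect_labels_in_s3_image("my-example-bucket", "photos/cat.jpg")
# print(summarize_labels(response))
```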

Natural Language Processing (NLP)

Understanding human language in all its complexity has long been one of AI's greatest challenges, and deep learning has made breakthrough after breakthrough in this domain. Sentiment analysis powered by deep learning can now understand not just whether text is positive or negative, but can detect subtle emotions, sarcasm, and context-dependent meaning. Language translation has reached a level where real-time translation between languages preserves not just meaning but often cultural nuances and idiomatic expressions. Text generation capabilities have exploded, with models creating human-like text that can adapt to different styles, tones, and purposes. Question answering systems now understand complex queries and can provide relevant responses by comprehending context across lengthy documents. Document understanding has evolved to extract meaning from complex documents, parsing everything from legal contracts to research papers to extract key information automatically. AWS provides these capabilities through services like Amazon Comprehend for text analysis, Amazon Translate for language translation, and Amazon Kendra for intelligent enterprise search that understands the intent behind queries.

Natural Language Processing Pipeline

From Raw Text to Actionable Insights with AWS AI Services

"The new product launch was incredibly successful. Sales exceeded expectations by 150% in the first quarter."

Tokenization

Breaking text into words & subwords

Embedding

Converting tokens to vectors

Transformer Layers

Multi-head attention & processing

Sentiment Analysis

✨ Highly positive sentiment detected

Translation

🇪🇸 Translated to Spanish

Entity Extraction
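The first two pipeline stages, tokenization and embedding, can be illustrated with a toy sketch. It makes simplifying assumptions: the tokenizer splits on words and punctuation only (real systems typically use learned subword tokenizers), and the embedding table is filled with random numbers, whereas a trained model learns these vectors.

```python
import random
import re

def tokenize(text):
    """Lowercase the text and split it into word and punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", text.lower())

def build_vocabulary(tokens):
    """Assign each distinct token an integer id in order of first appearance."""
    vocabulary = {}
    for token in tokens:
        vocabulary.setdefault(token, len(vocabulary))
    return vocabulary

def make_embedding_table(vocabulary_size, dimensions=4, seed=0):
    """One vector per token id. Random here; learned in a real model."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(dimensions)]
            for _ in range(vocabulary_size)]

def embed(tokens, vocabulary, table):
    """Look up the vector for every token."""
    return [table[vocabulary[token]] for token in tokens]

sentence = ("The new product launch was incredibly successful. "
            "Sales exceeded expectations by 150% in the first quarter.")
tokens = tokenize(sentence)
vocabulary = build_vocabulary(tokens)
table = make_embedding_table(len(vocabulary))
vectors = embed(tokens, vocabulary, table)

print(tokens[:6])
print(len(tokens), "tokens ->", len(vectors), "vectors of size", len(vectors[0]))
```

Note that both occurrences of "the" map to the same vector. It is the transformer layers that follow which give each occurrence a context-dependent meaning.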

Key Takeaways

Human language is complex

Sentiment Analysis: Understanding emotions in text

Language Translation: Real-time translation between languages

Text Generation: Creating human-like text

Question Answering: Understanding and responding to queries

Document Understanding: Extracting meaning from complex documents

AWS Services:

Amazon Comprehend - text analysis

Amazon Translate - language translation

Amazon Kendra - intelligent enterprise search
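Here is a hedged sketch of how the sentiment, entity, and translation steps might be wired together with boto3. The `detect_sentiment`, `detect_entities`, and `translate_text` calls are real boto3 operations; the function names `analyze_text` and `summarize` are my own, and the sample dictionaries are hand-written stand-ins shaped like the responses, not actual service output.

```python
def analyze_text(text, target_language="es"):
    """Run sentiment analysis, entity extraction, and translation on English text."""
    import boto3  # imported here so summarize() can be tried without AWS set up
    comprehend = boto3.client("comprehend")
    translate = boto3.client("translate")
    sentiment = comprehend.detect_sentiment(Text=text, LanguageCode="en")
    entities = comprehend.detect_entities(Text=text, LanguageCode="en")
    translation = translate.translate_text(
        Text=text, SourceLanguageCode="en", TargetLanguageCode=target_language
    )
    return summarize(sentiment, entities, translation)

def summarize(sentiment, entities, translation):
    """Keep only the fields a downstream application is likely to need."""
    return {
        "sentiment": sentiment["Sentiment"],
        "entities": [(e["Type"], e["Text"]) for e in entities["Entities"]],
        "translated": translation["TranslatedText"],
    }

# Hand-written samples in the shape of the three responses (not real output).
SAMPLE_SENTIMENT = {"Sentiment": "POSITIVE",
                    "SentimentScore": {"Positive": 0.98, "Negative": 0.0,
                                       "Neutral": 0.02, "Mixed": 0.0}}
SAMPLE_ENTITIES = {"Entities": [
    {"Type": "QUANTITY", "Text": "150%", "Score": 0.99},
    {"Type": "DATE", "Text": "first quarter", "Score": 0.95},
]}
SAMPLE_TRANSLATION = {"TranslatedText": "(Spanish text would appear here)"}

result = summarize(SAMPLE_SENTIMENT, SAMPLE_ENTITIES, SAMPLE_TRANSLATION)
print(result)
```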

Speech and Audio

The transformation of speech and audio processing through deep learning has made voice interfaces a natural part of our digital lives. Speech recognition technology now converts voice to text with such accuracy that dictation has become a viable alternative to typing for many professionals, while voice commands control everything from our phones to our homes. Speech synthesis has advanced to the point where text-to-speech systems produce voices that are nearly indistinguishable from human speech, complete with appropriate emphasis and emotion. Speaker identification can recognize who's talking even in noisy environments or when multiple people are speaking, enabling personalized experiences and enhanced security. The field has even extended to creative applications like music generation, where deep learning models compose original pieces in various styles. AWS offers these capabilities through Amazon Transcribe for speech-to-text conversion, Amazon Polly for natural-sounding text-to-speech, and Amazon Lex for building conversational interfaces - the same technology that powers Alexa's ability to understand and respond to millions of users daily.

Key Takeaways

Converting between speech and text:

Speech Recognition: Voice to text

Speech Synthesis: Text to voice

Speaker Identification: Recognizing who's talking

Music Generation: Creating original compositions

AWS Services:

Amazon Transcribe - speech to text

Amazon Polly - text to speech

Amazon Lex - conversational interfaces (built on the same technology as Alexa)

Generative AI: The New Frontier

This is where deep learning gets really exciting, pushing beyond analysis and recognition into the realm of creation. Large Language Models (LLMs) like GPT and Claude use transformer architecture to understand and generate human-like text, powering everything from sophisticated chatbots that can maintain context over long conversations to code generation tools that can write functional programs from natural language descriptions. These models don't just follow templates - they track context, maintain consistency, and can adopt different writing styles and tones. Image generation models like DALL-E and Stable Diffusion have captured public imagination by creating images from text descriptions. They are built on diffusion models (earlier image generators often relied on GANs, or Generative Adversarial Networks) and produce everything from photorealistic scenes to artistic interpretations that would fool most observers. The technology has evolved so rapidly that what seemed like science fiction just a few years ago is now accessible to anyone with an internet connection. Multimodal models represent the cutting edge, combining text and vision understanding to enable AI systems that can answer questions about images, generate images from complex multi-paragraph descriptions, or even create videos from text prompts. These breakthroughs are all powered by deep learning's fundamental ability to model complex patterns in massive datasets, learning representations of the world that can be recombined in novel ways to create something new.

🔮 How the AI Magic Happens: Simplified Transformer Architecture

Key Takeaways

Large Language Models (LLMs) like GPT and Claude use transformer architecture to understand and generate human-like text. They power chatbots, code generation, and creative writing.

Image Generation models like DALL-E and Stable Diffusion create photorealistic or artistic images from text descriptions. They are built on diffusion models; GANs (Generative Adversarial Networks) are an earlier approach to image generation.

Multimodal Models combine text and vision understanding, allowing AI to answer questions about images or generate images from complex descriptions.

These breakthroughs are all powered by deep learning's ability to understand complex patterns in massive datasets.
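The core operation inside a transformer layer is attention, in which every token decides how much to "look at" every other token. Below is a minimal sketch of scaled dot-product attention in plain Python. It is deliberately simplified: a single head, no learned projection matrices, and three made-up two-dimensional token vectors used as queries, keys, and values alike.

```python
import math

def softmax(scores):
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        output = [sum(w * v[j] for w, v in zip(weights, values))
                  for j in range(len(values[0]))]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights

# Three made-up token vectors; in self-attention Q, K and V come from the same tokens.
token_vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(token_vectors, token_vectors, token_vectors)

for row in weights:
    print([round(w, 2) for w in row])
```

Each printed row shows how one token distributes its attention across all three tokens. Real models run many such heads in parallel over learned projections of the embeddings.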

Deep Learning on AWS

Why AWS for Deep Learning?

Deep learning has specific requirements that AWS addresses:

Computational Power: Training deep learning models requires serious GPU power. A model that would take weeks or months on your laptop can train in hours or days on AWS GPU instances.

Scalability: Need to process millions of images? AWS can scale up instantly.

Pre-trained Models: Why start from scratch? AWS offers models already trained on millions of examples.

Managed Services: Focus on your problem, not managing GPU clusters.

Key AWS Deep Learning Services

Amazon SageMaker

Build, train, and deploy custom deep learning models

Built-in algorithms and framework support (TensorFlow, PyTorch, MXNet)

Distributed training for large models

Automatic model tuning to find the best hyperparameters

AWS Deep Learning AMIs

Pre-configured environments with all frameworks installed

Optimized for AWS infrastructure

Start training in minutes, not hours of setup

Pre-trained Services

Instead of building from scratch, use:

Rekognition: Computer vision for images and video

Comprehend: Natural language processing

Textract: Extract text and data from documents

Forecast: Time-series predictions using deep learning

Personalize: Deep learning-powered recommendations

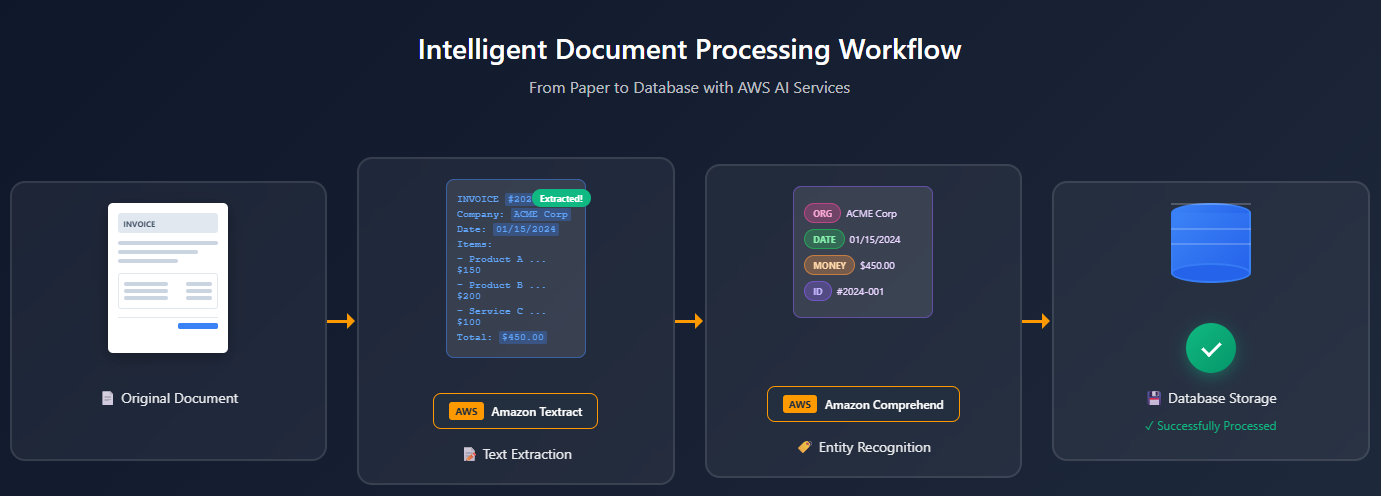

Real-World Example: Intelligent Document Processing

Let's see how deep learning powers a complete solution:

Imagine processing thousands of invoices automatically:

Document Capture: User uploads invoice photo

Text Extraction: Amazon Textract uses deep learning to extract text, even from handwritten notes

Layout Understanding: Textract identifies tables, forms, and key-value pairs

Content Analysis: Amazon Comprehend understands the context - vendor names, amounts, dates

Data Validation: Check extracted data against business rules

Database Entry: Automatically populate your accounting system

Multiple deep learning models working together seamlessly! This same workflow handles receipts, contracts, medical forms - any document type.
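The workflow above might be sketched as follows. This is an outline under several assumptions rather than a finished pipeline: it uses Textract's `detect_document_text` for plain text lines (the table and form step would use `analyze_document` with `FeatureTypes=["TABLES", "FORMS"]` instead), the field names and the `max_amount` business rule are hypothetical, and the sample response is hand-written.

```python
def extract_lines(textract_response):
    """Pull the text of every LINE block out of a Textract response."""
    return [block["Text"] for block in textract_response.get("Blocks", [])
            if block.get("BlockType") == "LINE"]

def validate_invoice(fields, max_amount=10000.0):
    """Check extracted fields against simple (hypothetical) business rules."""
    problems = []
    for name in ("vendor", "amount", "date"):
        if fields.get(name) is None:
            problems.append(f"missing {name}")
    amount = fields.get("amount")
    if amount is not None and not (0 < amount <= max_amount):
        problems.append("amount out of range")
    return problems

def process_invoice(bucket, key):
    """Text extraction followed by content analysis for one invoice image in S3."""
    import boto3  # imported here so the helpers above run without AWS set up
    textract = boto3.client("textract")
    comprehend = boto3.client("comprehend")
    response = textract.detect_document_text(
        Document={"S3Object": {"Bucket": bucket, "Name": key}}
    )
    text = "\n".join(extract_lines(response))
    entities = comprehend.detect_entities(Text=text, LanguageCode="en")["Entities"]
    return text, entities

# Hand-written sample in the shape of a Textract response (not real output).
SAMPLE_TEXTRACT = {"Blocks": [
    {"BlockType": "PAGE"},
    {"BlockType": "LINE", "Text": "Invoice from Example Vendor"},
    {"BlockType": "LINE", "Text": "Total: 1250.00"},
]}
print(extract_lines(SAMPLE_TEXTRACT))
print(validate_invoice({"vendor": "Example Vendor", "amount": 1250.0, "date": "2024-01-31"}))
print(validate_invoice({"vendor": "Example Vendor", "amount": -5.0}))
```

Mapping Comprehend's entities onto the `vendor`, `amount`, and `date` fields is application-specific and is left out here.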

Challenges and Considerations

Data Requirements

Deep learning is data-hungry:

Volume: Needs thousands to millions of examples

Quality: Clean, labeled data is essential

Diversity: Must represent all scenarios

Tip: Start with pre-trained models when possible. Transfer learning lets you adapt existing models with much less data.

Computational Costs

Training deep learning models can be expensive:

GPU instances cost $1-30+ per hour

Large models can take days to train

Costs multiply with experimentation

Cost Optimization:

Use spot instances (up to 90% savings)

Start with smaller models

Leverage pre-trained services

Monitor and stop unused training jobs
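A quick back-of-the-envelope calculation shows why these optimizations matter. The figures below are illustrative picks from the ranges quoted above (a $30 per hour instance, two days of training, the maximum 90% spot discount), not actual AWS prices.

```python
def estimate_training_cost(hourly_rate, hours, instances=1, spot_discount=0.0):
    """Estimated cost in dollars: rate x hours x instances, less any spot discount."""
    if not 0.0 <= spot_discount < 1.0:
        raise ValueError("spot_discount must be between 0 and 1")
    return hourly_rate * hours * instances * (1.0 - spot_discount)

on_demand = estimate_training_cost(hourly_rate=30.0, hours=48)
with_spot = estimate_training_cost(hourly_rate=30.0, hours=48, spot_discount=0.9)
ten_experiments = 10 * on_demand

print(f"One on-demand run:      ${on_demand:,.2f}")
print(f"Same run on spot:       ${with_spot:,.2f}")
print(f"Ten on-demand attempts: ${ten_experiments:,.2f}")
```

Actual spot savings vary by instance type and over time, so treat the discount as a best case.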

The Black Box Problem

Deep learning models are notoriously hard to interpret:

Millions of parameters make decisions opaque

Difficult to explain why a specific prediction was made

Challenge for regulated industries

Solutions:

Use AWS services like SageMaker Clarify for model explanation

Choose simpler models when interpretability is crucial

Document model behavior extensively

When Deep Learning Isn't the Answer

Despite the hype, deep learning isn't always the best solution:

Small datasets: Traditional ML often works better

Simple patterns: Don't use a sledgehammer for a nail

Interpretability required: Simpler models may be necessary

Resource constraints: Edge devices may not support large models

Key Takeaways for AWS AI Practitioner

Deep learning is loosely inspired by the brain's structure, using interconnected layers of artificial neurons

Automatic feature learning is the superpower - no manual feature engineering needed

Data and compute requirements are significant - thousands of examples and GPU power

AWS provides the full stack:

Infrastructure (GPUs, storage)

Platforms (SageMaker)

Pre-trained services (Rekognition, Comprehend, Textract)

Start with pre-trained when possible - cheaper and faster than training from scratch

Transfer learning is your friend - adapt existing models to your needs

Consider computational costs - deep learning can be expensive at scale

Generative AI is deep learning's latest triumph - creating new content, not just analyzing

Practical Tips for Getting Started

Starting your deep learning journey doesn't mean reinventing the wheel. Always check first if AWS has a pre-trained service for your use case. Need sentiment analysis? Amazon Comprehend is ready to go. Object detection? Amazon Rekognition has you covered. These services save months of development time and thousands in training costs.

When pre-built services don't quite fit, transfer learning becomes your secret weapon. Take a model pre-trained on millions of images (like those trained on ImageNet) and fine-tune it on your specific data. This approach can achieve excellent results with just hundreds of examples instead of the millions you'd need to train from scratch.

Cost monitoring should be part of your workflow from day one. Set up billing alerts before you start any training jobs. A forgotten training job can cost thousands - I've heard horror stories of weekend experiments resulting in five-figure bills. Use SageMaker's automatic stopping conditions to prevent runaway costs.
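As one example of a stopping condition, SageMaker's `CreateTrainingJob` API accepts a `StoppingCondition` with a `MaxRuntimeInSeconds` limit, plus `MaxWaitTimeInSeconds` when managed spot training is enabled. The helper below is a sketch that only builds that fragment of the request. The function name is my own, and a real job also needs the algorithm, role, data, and resource settings.

```python
def training_job_limits(max_runtime_hours, use_spot=False, max_wait_hours=None):
    """Build the runtime-limit portion of a SageMaker create_training_job request."""
    runtime = int(max_runtime_hours * 3600)
    settings = {
        "StoppingCondition": {"MaxRuntimeInSeconds": runtime},
        "EnableManagedSpotTraining": use_spot,
    }
    if use_spot:
        # With spot training, the wait limit covers waiting for capacity plus
        # running, so it must be at least as long as the runtime limit.
        wait = int((max_wait_hours or max_runtime_hours) * 3600)
        if wait < runtime:
            raise ValueError("max_wait_hours must be >= max_runtime_hours")
        settings["StoppingCondition"]["MaxWaitTimeInSeconds"] = wait
    return settings

print(training_job_limits(2))
print(training_job_limits(2, use_spot=True, max_wait_hours=3))

# These keys would be merged into the full request, for example:
# boto3.client("sagemaker").create_training_job(**other_settings, **training_job_limits(2))
```

With a limit in place, a forgotten job is stopped by SageMaker once the runtime cap is reached instead of running through the weekend.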

Keep your approach simple and iterative. Begin with pre-trained services to establish a baseline, then move to SageMaker's built-in algorithms if you need more control. Only build custom models when absolutely necessary - they're expensive to develop and maintain.

Finally, remember that data quality usually matters more than quantity. Ten thousand clean, well-labeled examples will often outperform 100,000 messy ones. Invest time in data preparation - it's not glamorous, but it's the foundation of successful deep learning.

What's Next?

In our next post, we'll explore Generative AI and Foundation Models in detail - the technology behind ChatGPT, DALL-E, and Amazon Bedrock. We'll see how these massive models are trained, how they generate new content, and how AWS makes them accessible to developers.

Get ready to understand:

What makes foundation models different

How transformer architecture revolutionized AI

Prompt engineering techniques

Fine-tuning vs RAG (Retrieval Augmented Generation)

Amazon Bedrock's role in democratizing generative AI

Questions about neural networks? Confused about when to use deep learning vs traditional ML? Drop a comment below - remember, we're all learning together on this AWS AI journey!