AI Fundamentals - What is artificial intelligence

AI Fundamentals: What Is Artificial Intelligence Really?

Welcome back to my AWS AI Practitioner journey! Before we dive into the specifics of machine learning and neural networks, let's start with the big question: What exactly is AI? Spoiler alert: it's not about creating robot overlords or sentient machines (at least not yet). It's about solving problems we typically associate with human intelligence.

Defining Artificial Intelligence

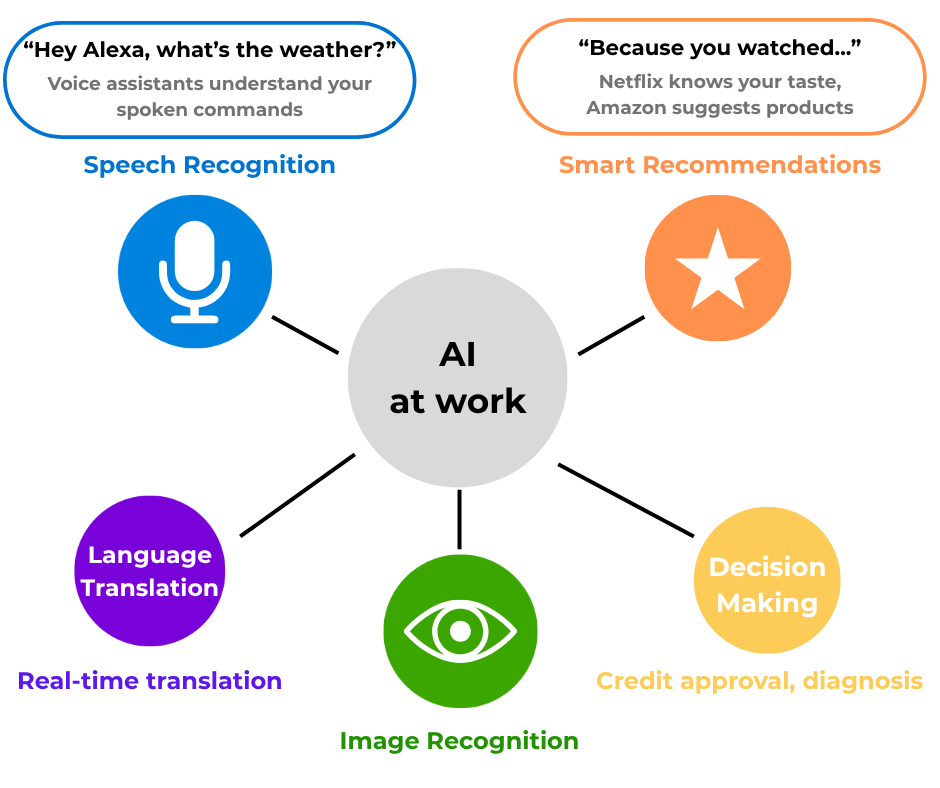

At its core, Artificial Intelligence (AI) refers to techniques that enable computers to mimic human intelligence. Think about what makes us "intelligent" - we can recognize faces, understand language, make decisions, solve problems, and learn from experience. AI is about teaching computers to do these same tasks.

Think of it like this: if a computer can do something that normally requires human intelligence - like understanding what you're saying, recognizing your cat in a photo, or recommending your next Netflix binge - that's AI in action.

The Technical Definition

More formally, AI encompasses:

Problem-solving capabilities that traditionally required human cognition

Pattern recognition in complex data

Decision-making based on inputs and learned experiences

Natural language understanding and generation

Visual perception and interpretation

A Brief History: How We Got Here

Understanding where AI came from helps us appreciate where it's going. Here's the journey:

The Birth of AI (1940s-1950s)

1943: Warren McCulloch and Walter Pitts propose a mathematical model of artificial neurons. Yes, we've been trying to mimic brains since the 1940s!

1950: Alan Turing publishes "Computing Machinery and Intelligence" and proposes the famous Turing Test. His question: "Can machines think?" Still debating this one at dinner parties.

1956: The Dartmouth workshop gives the field its name. John McCarthy coined the term "Artificial Intelligence" in the proposal for this summer gathering, where a group of scientists met to discuss "thinking machines" - and AI was born.

Early Enthusiasm and First Winter (1960s-1970s)

The 1960s saw huge optimism. Researchers created:

ELIZA (1966): Often called the first chatbot! Joseph Weizenbaum's program could hold conversations (sort of) by matching keywords in your input to scripted responses.

General problem solvers and theorem provers

But then reality hit. Computers were expensive, slow, and couldn't handle real-world complexity. Funding dried up in what we call the first "AI Winter."

Revival and Second Winter (1980s-1990s)

1980s: Expert systems brought AI back! These were rule-based systems that could make decisions in specific domains. Think medical diagnosis systems with thousands of "if-then" rules.
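To make the "if-then" idea concrete, here is a minimal Python sketch of forward chaining, one common way rule-based systems apply their rules: keep firing any rule whose conditions are all known facts until nothing new can be concluded. The rules and fact names are hypothetical (a car-trouble toy instead of a medical knowledge base), and real expert-system shells were far more elaborate.

```python
# Hypothetical rule base: each rule is (facts that must all be known, fact to conclude).
RULES = [
    ({"engine_does_not_crank", "lights_dim"}, "battery_weak"),
    ({"battery_weak"}, "advice: charge or replace the battery"),
    ({"engine_cranks", "fuel_gauge_empty"}, "out_of_fuel"),
    ({"out_of_fuel"}, "advice: refuel"),
]


def forward_chain(facts, rules):
    """Repeatedly fire rules whose conditions are satisfied; return only the new conclusions."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when all its conditions are known and its conclusion is new.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known - set(facts)


print(forward_chain({"engine_does_not_crank", "lights_dim"}, RULES))
print(forward_chain({"strange_rattling_noise"}, RULES))  # set(): no rule covers this case
```

Notice the second call: a situation nobody wrote a rule for produces no conclusion at all. Multiply that by thousands of hand-written rules and you get the brittleness that eventually caught up with expert systems.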

But again, limitations emerged. Expert systems were brittle, expensive to maintain, and couldn't learn. As enthusiasm and funding faded in the late 1980s, cue AI Winter #2.

1997: IBM's Deep Blue defeats world chess champion Garry Kasparov. The world takes notice.

The Deep Learning Revolution (2000s-2010s)

This is where things get exciting:

2006: Geoffrey Hinton and colleagues show that deep neural networks can be trained effectively by pretraining them one layer at a time. Game changer!

2012: AlexNet wins the ImageNet competition by a huge margin using deep learning. Suddenly, computers could "see" better than ever.

2016: Google DeepMind's AlphaGo defeats Lee Sedol, one of the world's top Go players, 4-1. Go has vastly more possible board positions than chess - this was huge.

The Current Era (2020s)

We're living in AI's golden age:

2020: GPT-3 shows language models can write, code, and create

2022: ChatGPT launches and breaks the internet (figuratively)

2023: Generative AI explodes - DALL-E, Midjourney, Stable Diffusion create art

2024-2025: AI becomes embedded everywhere - from your IDE to your toaster (okay, maybe not toasters yet)

Types of AI: Let's Get Practical

When people talk about AI, they're usually referring to one of these categories:

1. Narrow AI (What We Have Now)

Also called "Weak AI" - but don't let the name fool you. This is AI designed for specific tasks:

Virtual assistants (Alexa, Siri)

Recommendation engines (Netflix, Amazon)

Facial recognition systems (unlocking your phone, tagging on social media)

Language translators

Game-playing AI (Chess, Go)

Autonomous vehicles

These systems are incredibly good at their specific tasks but can't generalize. Your chess AI can't suddenly start driving a car.

2. General AI (The Holy Grail)

Also called "Strong AI" or AGI (Artificial General Intelligence). This would be AI that matches human intelligence across all domains. It could learn any task, reason abstractly, and transfer knowledge between domains.

Current status: We're not there yet, not even close, despite what sci-fi movies suggest!

3. Super AI (The Far Future?)

Theoretical AI that surpasses human intelligence in all aspects. This is pure speculation and the stuff of philosophy debates and Hollywood blockbusters.

Real-World AI Use Cases That Actually Matter

Let's move from theory to practice. Here's where AI is making a real difference today:

Healthcare

Medical imaging: AI helps detect cancer in mammograms and CT scans, in some studies matching or even outperforming human radiologists on specific tasks

Drug discovery: AI helps identify promising drug candidates faster, shortening parts of a search that traditionally takes years

Personalized treatment: AI analyzes patient data to recommend tailored treatment plans

Mental health: AI chatbots provide 24/7 support and early intervention

Finance

Fraud detection: AI spots unusual patterns in milliseconds

Risk assessment: More accurate credit scoring and loan approvals

Algorithmic trading: AI makes split-second market decisions

Customer service: AI chatbots handle routine banking queries

Transportation

Autonomous vehicles: From Tesla's Autopilot to fully self-driving cars (coming soon™)

Traffic optimization: AI adjusts traffic lights in real-time to reduce congestion

Predictive maintenance: AI predicts when vehicles need service before they break down

Route optimization: Your Uber arrives faster thanks to AI routing

Retail and E-commerce

Personalized recommendations: "You might also like..." (and you probably will)

Inventory management: AI predicts demand and optimizes stock levels

Visual search: Snap a photo, find the product

Dynamic pricing: Prices adjust based on demand, competition, and your browsing history

Education

Personalized learning: AI adapts to each student's pace and style

Automated grading: Frees teachers to focus on teaching

Intelligent tutoring: 24/7 help for students

Predictive analytics: Identifies students at risk of dropping out

Entertainment

Content creation: AI writes scripts, composes music, creates art

Game development: NPCs with more realistic behaviors

Content recommendation: Your "For You" page knows you scary well

Special effects: AI generates realistic CGI faster and cheaper

Key AI Concepts You Need to Know

Before we dive deeper in future posts, here are the fundamental concepts that power AI:

1. Data: The Fuel of AI

AI systems learn from data. The more high-quality data, the better the AI tends to perform. Think of data as the textbook AI uses to study - if the textbook is full of errors or missing pages, the student won't learn properly.

Garbage in, garbage out is more than just a saying - it's a fundamental law of AI. If you train an AI system on flawed data, it will produce flawed results. Imagine training a facial recognition system using only photos taken in bright sunlight. When deployed in a dimly lit security camera setting, it would fail miserably. This is why data scientists are often said to spend up to 80% of their time cleaning and preparing data.

Bias in, bias out is equally critical and has real-world consequences. AI reflects the biases in its training data, sometimes amplifying them. If a hiring AI is trained on historical data where most engineers were male, it might unfairly favor male candidates. Amazon famously scrapped an AI recruiting tool in 2018 for this exact reason. This isn't the AI being malicious - it's simply finding and replicating patterns in the data it was given.
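Here is a deliberately tiny Python sketch of "bias in, bias out". The historical records, the group labels "A" and "B" (standing in for an attribute like gender), and the counting "model" are all hypothetical: the model simply predicts whatever outcome was most common for each (group, qualified) combination in the past. Nothing in the code mentions prejudice, yet it faithfully reproduces the skew in its training data.

```python
from collections import Counter

# Hypothetical historical hiring records: (group, qualified, hired).
history = (
    [("A", True, True)] * 8 + [("A", True, False)] * 2
    + [("B", True, True)] * 2 + [("B", True, False)] * 8
    + [("A", False, True)] * 1 + [("A", False, False)] * 9
    + [("B", False, False)] * 10
)


def train(records):
    """Learn the majority past outcome for each (group, qualified) combination."""
    outcomes = {}
    for group, qualified, hired in records:
        outcomes.setdefault((group, qualified), Counter())[hired] += 1
    return {key: counts.most_common(1)[0][0] for key, counts in outcomes.items()}


model = train(history)
print(model[("A", True)])  # True
print(model[("B", True)])  # False: equally qualified, but past decisions said no
```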

More isn't always better when it comes to data. Quality usually trumps quantity. A million blurry, mislabeled images often won't train a better model than ten thousand clear, correctly labeled ones. It's like studying for an exam - reading one good textbook thoroughly is better than skimming through dozens of poor-quality notes.
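A minimal Python sketch of quality beating quantity, under stated assumptions: the "model" is a single learned threshold (predict positive when x >= t), the true pattern is x >= 50, and the larger dataset has a hypothetical systematic labeling mistake (every example from 50 to 69 was labeled negative). Ten clean examples recover the right threshold; a hundred flawed ones confidently learn the wrong one.

```python
def fit_threshold(data):
    """Pick the threshold t (from the training x values) with the fewest training errors."""
    def training_errors(t):
        return sum((x >= t) != label for x, label in data)
    return min((x for x, _ in data), key=training_errors)


def accuracy(t, data):
    return sum((x >= t) == label for x, label in data) / len(data)


def true_label(x):
    return x >= 50  # the real pattern we hope the model learns


test_set = [(x, true_label(x)) for x in range(100)]

# Small but clean: 10 correctly labeled examples.
small_clean = [(x, true_label(x)) for x in range(0, 100, 10)]
# Large but flawed: 100 examples, with 50..69 systematically mislabeled as negative.
large_mislabeled = [(x, true_label(x) and not 50 <= x < 70) for x in range(100)]

t_clean = fit_threshold(small_clean)
t_noisy = fit_threshold(large_mislabeled)
print(t_clean, accuracy(t_clean, test_set))  # 50 1.0
print(t_noisy, accuracy(t_noisy, test_set))  # 70 0.8
```

The flawed model fits its own training data perfectly, which is exactly why bad labels are so dangerous: nothing looks wrong until it meets real data.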

2. Algorithms: The Brains

Algorithms are the mathematical recipes that help AI learn patterns. They're the instructions that tell the computer how to find meaningful relationships in data.

Supervised learning is like learning with a teacher who provides correct answers. The algorithm learns from labeled examples - emails marked as "spam" or "not spam," X-rays labeled as "tumor" or "healthy." Once trained, it can label new, unseen examples. It's the most common type because many business problems have historical data with known outcomes. Think credit decisions (approved/denied) or sales forecasting (using past sales data).
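As a minimal illustration of learning from labeled examples, here is a toy spam filter in Python using naive Bayes, one classic supervised algorithm chosen here for brevity (the post doesn't prescribe a specific method). The six training emails are made up; real filters use vastly more data and richer features.

```python
import math
from collections import Counter


def tokenize(text):
    return text.lower().split()


def train(examples):
    """examples: list of (text, label). Counts words per label (multinomial naive Bayes)."""
    docs_per_label = Counter()
    words_per_label = {}
    vocab = set()
    for text, label in examples:
        docs_per_label[label] += 1
        counts = words_per_label.setdefault(label, Counter())
        for word in tokenize(text):
            counts[word] += 1
            vocab.add(word)
    return docs_per_label, words_per_label, vocab


def classify(model, text):
    docs_per_label, words_per_label, vocab = model
    total_docs = sum(docs_per_label.values())
    best_label, best_score = None, -math.inf
    for label, n_docs in docs_per_label.items():
        counts = words_per_label[label]
        total_words = sum(counts.values())
        # log P(label) + sum of log P(word | label), with add-one (Laplace) smoothing
        score = math.log(n_docs / total_docs)
        for word in tokenize(text):
            score += math.log((counts[word] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


labeled_emails = [
    ("win free money now", "spam"),
    ("free prize claim now", "spam"),
    ("win a free cruise", "spam"),
    ("meeting moved to monday", "not spam"),
    ("lunch at noon tomorrow", "not spam"),
    ("project update attached", "not spam"),
]
model = train(labeled_emails)
print(classify(model, "claim your free money"))   # spam
print(classify(model, "monday project meeting"))  # not spam
```

Neither test email appears in the training set; the model labels them from word patterns it learned from the labeled examples.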

Unsupervised learning is like exploring a new city without a map. The algorithm finds hidden patterns in data without being told what to look for. It might discover that your customers naturally fall into three groups: bargain hunters, premium buyers, and bulk purchasers - insights you didn't know existed. This is powerful for customer segmentation, anomaly detection (finding unusual transactions), and discovering topics in documents.
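To sketch how an algorithm can find customer groups nobody labeled, here is k-means clustering in plain Python. The customer numbers (average order value, orders per month) are invented to form three obvious groups, and the deterministic "farthest point" initialization is a simplification chosen so the example is repeatable; production code would typically scale the features and use a library implementation.

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def farthest_point_init(points, k):
    """Start from the first point, then repeatedly add the point farthest from all chosen centroids."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(squared_distance(p, c) for c in centroids)))
    return centroids


def kmeans(points, k, iterations=10):
    centroids = farthest_point_init(points, k)
    labels = []
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(k), key=lambda i: squared_distance(p, centroids[i])) for p in points]
        # Update step: move each centroid to the mean of its members.
        for i in range(k):
            members = [p for p, label in zip(points, labels) if label == i]
            if members:
                centroids[i] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return labels, centroids


# Hypothetical customers: (average order value in $, orders per month)
customers = [
    (10, 2), (12, 3), (11, 2),       # small, cheap orders
    (200, 1), (210, 1), (190, 2),    # few, expensive orders
    (60, 20), (55, 22), (65, 21),    # frequent, mid-sized orders
]
labels, centroids = kmeans(customers, k=3)
print(labels)  # [0, 0, 0, 1, 1, 1, 2, 2, 2]
```

The algorithm only returns cluster numbers. Naming them "bargain hunters", "premium buyers", and "bulk purchasers" is still a human interpretation step.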

Reinforcement learning is learning by doing, like a child learning to ride a bike. The algorithm tries different actions, receives rewards for good outcomes and penalties for bad ones, and gradually learns the best strategy. This powers game-playing AI (like AlphaGo), robotic control, and autonomous vehicles. The key is having a clear reward signal and the ability to practice - sometimes millions of times in simulation.
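Here is a minimal sketch of that trial-and-error loop: tabular Q-learning (one classic reinforcement learning algorithm, picked here for illustration) on a hypothetical five-cell corridor. The agent starts in the middle, earns +1 for reaching the right end and -1 for falling off the left end, and has to discover through practice that moving right is the better strategy. The learning rate, discount factor, exploration rate, and episode count are arbitrary choices for this toy.

```python
import random

N_STATES = 5        # corridor cells 0..4; cells 0 and 4 end the episode
START = 2           # the agent always starts in the middle
ACTIONS = (-1, +1)  # step left, step right


def step(state, action):
    """Hypothetical environment: +1 reward at the right end, -1 penalty at the left end."""
    nxt = state + action
    if nxt == N_STATES - 1:
        return nxt, 1.0, True
    if nxt == 0:
        return nxt, -1.0, True
    return nxt, 0.0, False


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = START, False
        while not done:
            # Explore with probability epsilon, otherwise exploit the best-known action.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            nxt, reward, done = step(state, action)
            # Move the estimate toward reward + discounted value of the best next action.
            future = 0.0 if done else max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state = nxt
    return q


q = q_learning()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(1, N_STATES - 1)}
print(policy)  # +1 means "step right"
```

Nobody tells the agent "go right". It only sees rewards and penalties, and the preference emerges from hundreds of practice episodes, which is the same reason real systems lean so heavily on simulation.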

3. Computing Power: The Muscle

Modern AI needs serious computational power because it performs billions of calculations to find patterns in data. The breakthroughs in AI over the last decade weren't just algorithmic - they were enabled by massive increases in computing power.

GPUs (Graphics Processing Units) were originally designed for video games but turned out to be perfect for AI. While a CPU (your computer's main processor) is like a brilliant professor who solves problems one at a time, a GPU is like having thousands of students who can work on simple problems simultaneously. Training a modern language model on CPUs might take years; on GPUs, it takes weeks. NVIDIA became one of the world's most valuable companies by recognizing this early.

Cloud computing democratized AI by providing massive scale without massive investment. Before cloud, only tech giants could afford the server farms needed for AI. Now, a startup can rent thousands of GPUs for a few hours, train their model, and shut them down. AWS, Azure, and Google Cloud compete fiercely in this space, constantly launching more powerful AI-optimized instances.

Edge computing brings AI directly to your device for speed and privacy. Instead of sending your voice to the cloud every time you say "Hey Siri," your phone can process it locally. This reduces latency (no round trip to the cloud), works offline, and keeps your data private. It's why your phone can now blur backgrounds in video calls or translate languages in real-time without an internet connection.

4. Feedback Loops: The Teacher

AI improves through feedback, creating a cycle of continuous learning. This is what separates modern AI from traditional software - it gets better over time.

Training is the initial learning phase where the AI studies examples and finds patterns. Like a medical student studying thousands of case histories, the AI builds its initial understanding. But just like that student, it needs to be tested to ensure it truly learned and didn't just memorize.

Validation is testing on new data the model hasn't seen before. This catches "overfitting" - when a model memorizes training data instead of learning generalizable patterns. It's like a student who memorized practice exam answers but can't solve slightly different problems on the real test. Validation data acts as a practice test, helping us tune the model before the real exam.
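The memorize-versus-learn distinction is easy to see in a few lines of Python. Both "models" below are hypothetical toys: one memorizes its training pairs in a lookup table, the other fits a straight line by least squares. The data follows y = 2x + 1; training uses even x values and validation uses odd ones the models never saw.

```python
def make_data(xs):
    # Hypothetical ground truth the model should discover: y = 2x + 1
    return [(x, 2 * x + 1) for x in xs]


train_data = make_data(range(0, 20, 2))       # even x values
validation_data = make_data(range(1, 20, 2))  # odd x values, never seen during training


class Memorizer:
    """Stores training answers verbatim; has no idea what to do with new inputs."""

    def fit(self, data):
        self.table = dict(data)
        return self

    def predict(self, x):
        return self.table.get(x, 0)


class LineFit:
    """Fits y = slope * x + intercept by ordinary least squares."""

    def fit(self, data):
        n = len(data)
        mean_x = sum(x for x, _ in data) / n
        mean_y = sum(y for _, y in data) / n
        cov = sum((x - mean_x) * (y - mean_y) for x, y in data)
        var = sum((x - mean_x) ** 2 for x, _ in data)
        self.slope = cov / var
        self.intercept = mean_y - self.slope * mean_x
        return self

    def predict(self, x):
        return self.slope * x + self.intercept


def mean_abs_error(model, data):
    return sum(abs(model.predict(x) - y) for x, y in data) / len(data)


for model in (Memorizer().fit(train_data), LineFit().fit(train_data)):
    print(type(model).__name__,
          "train error:", mean_abs_error(model, train_data),
          "validation error:", mean_abs_error(model, validation_data))
```

Both models score a perfect zero on the training data; only the held-out validation set reveals that the memorizer learned nothing it can apply to new inputs.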

Production feedback is where AI systems truly shine - they learn from real-world use. Every time you mark an email as spam that Gmail missed, you're providing feedback that improves the model. Netflix recommendations get better as you watch more shows. Tesla's Autopilot improves from millions of miles of driving data. This continuous learning loop is why AI services get smarter over time, unlike traditional software that remains static until manually updated.
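A toy sketch of that feedback loop in Python: a bag-of-words perceptron that nudges its word weights whenever a user corrects it. The class is hypothetical and illustrative only, not a description of Gmail's or any other real product's filter; production systems commonly collect feedback and retrain in batches, while this sketch updates immediately to keep things short.

```python
from collections import defaultdict


class OnlineSpamFilter:
    """Hypothetical toy filter: a perceptron over words, updated from user feedback."""

    def __init__(self):
        self.weights = defaultdict(float)  # word -> "spamminess" weight, starts at 0

    def score(self, text):
        return sum(self.weights[word] for word in text.lower().split())

    def is_spam(self, text):
        return self.score(text) > 0

    def feedback(self, text, user_says_spam):
        """Perceptron rule: adjust weights only when the current prediction was wrong."""
        if self.is_spam(text) != user_says_spam:
            delta = 1.0 if user_says_spam else -1.0
            for word in text.lower().split():
                self.weights[word] += delta


spam_filter = OnlineSpamFilter()
missed = "exclusive crypto giveaway click now"
print(spam_filter.is_spam(missed))                    # False: the filter missed it
spam_filter.feedback(missed, user_says_spam=True)     # user clicks "Report spam"
print(spam_filter.is_spam(missed))                    # True
print(spam_filter.is_spam("crypto giveaway inside"))  # True: similar messages now caught
```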

The AI Landscape Today

As we prepare for the AWS AI Practitioner exam, it's crucial to understand the current AI ecosystem:

Major Players

Tech Giants: Google, Amazon, Microsoft, Meta, Apple

AI-First Companies: OpenAI, Anthropic, Stability AI

Hardware Leaders: NVIDIA (those precious GPUs!), AMD, Intel

Cloud Providers: AWS, Azure, Google Cloud (where AI happens at scale)

Hot Trends

Generative AI: Creating new content (text, images, code, music)

Large Language Models: GPT-4, Claude, and Gemini understand and generate human language

Multimodal AI: Systems that work with text, images, and audio together

AI Ethics: Ensuring AI is fair, transparent, and beneficial

Edge AI: Running AI directly on devices for privacy and speed

Why This Matters for AWS AI Practitioner

Understanding these fundamentals is crucial because:

AWS builds on these concepts: Every AWS AI service implements these principles

Better decision-making: You'll know which AWS service fits your use case

Cost optimization: Understanding AI helps you choose efficient solutions

Real-world application: You'll bridge theory and practice effectively

Common Misconceptions About AI

Let's bust some myths:

❌ "AI will replace all jobs"

Reality: AI augments human capabilities. It's changing jobs, not eliminating them entirely. New roles are emerging (prompt engineer, anyone?).

❌ "AI is sentient/conscious"

Reality: Current AI is pattern matching at scale. It's not self-aware, despite convincing conversations.

❌ "AI is always right"

Reality: AI makes mistakes, can be biased, and has limitations. Always verify critical decisions.

❌ "AI is too complex for non-techies"

Reality: Modern AI tools are becoming user-friendly. You don't need a PhD to use AI effectively.

Your AI Journey Checklist

As you embark on learning AI for AWS, remember:

[ ] AI is about solving human-like problems with computers

[ ] We're in the era of Narrow AI - powerful but specialized

[ ] Data quality is crucial for AI success

[ ] AI is already transforming every industry

[ ] Understanding fundamentals helps you use AWS AI services better

What's Next?

Now that we understand what AI is and where it came from, we're ready to dive deeper. In our next post, we'll explore Machine Learning - the engine that powers modern AI. We'll look at how computers actually learn from data and the different approaches they use.

Get ready to understand:

How machines learn without explicit programming

The difference between supervised, unsupervised, and reinforcement learning

Why your Netflix recommendations are so eerily accurate

How to think about ML in the context of AWS services

Key Takeaways

AI is about mimicking human intelligence - not creating sentient beings

We've been at this for 70+ years - overnight success takes decades

Current AI is narrow but powerful - excellent at specific tasks

AI is already everywhere - from healthcare to entertainment

Understanding AI helps you leverage AWS - better decisions, better solutions

Remember, we're all learning together. AI might seem complex, but at its heart, it's about teaching computers to be helpful in human-like ways. And with AWS making these tools accessible, we're all capable of building AI-powered solutions.

Ready to continue the journey? Let's demystify AI together, one concept at a time!

Study Resources: